Pandas – Python Data Analysis Library

I’ve recently started using Python’s excellent Pandas library as a data analysis tool and, although the transition from R’s excellent data.table library has been frustrating at times, I’m finding my way around and most things work quite well.

One aspect that I’ve recently been exploring is the task of grouping large data frames by different variables, and applying summary functions on each group. This is accomplished in Pandas using the “groupby()” and “agg()” functions of Pandas DataFrame objects.

Update: Pandas version 0.20.1 in May 2017 changed the aggregation and grouping APIs. This post has been updated to reflect the new changes.

A Sample DataFrame

In order to demonstrate the effectiveness and simplicity of the grouping commands, we will need some data. For an example dataset, I have extracted my own mobile phone usage records. I analysed this type of data using Pandas during my work on KillBiller. If you’d like to follow along – the full csv file is available here.

The dataset contains 830 entries from my mobile phone log spanning a total time of 5 months. The CSV file can be loaded into a pandas DataFrame using the pandas.read_csv() function (the older pandas.DataFrame.from_csv() function was removed in pandas 1.0), and looks like this:

| | date | duration | item | month | network | network_type |
|---|---|---|---|---|---|---|
| 0 | 15/10/14 06:58 | 34.429 | data | 2014-11 | data | data |
| 1 | 15/10/14 06:58 | 13.000 | call | 2014-11 | Vodafone | mobile |
| 2 | 15/10/14 14:46 | 23.000 | call | 2014-11 | Meteor | mobile |
| 3 | 15/10/14 14:48 | 4.000 | call | 2014-11 | Tesco | mobile |
| 4 | 15/10/14 17:27 | 4.000 | call | 2014-11 | Tesco | mobile |
| 5 | 15/10/14 18:55 | 4.000 | call | 2014-11 | Tesco | mobile |
| 6 | 16/10/14 06:58 | 34.429 | data | 2014-11 | data | data |
| 7 | 16/10/14 15:01 | 602.000 | call | 2014-11 | Three | mobile |
| 8 | 16/10/14 15:12 | 1050.000 | call | 2014-11 | Three | mobile |
| 9 | 16/10/14 15:30 | 19.000 | call | 2014-11 | voicemail | voicemail |
| 10 | 16/10/14 16:21 | 1183.000 | call | 2014-11 | Three | mobile |
| 11 | 16/10/14 22:18 | 1.000 | sms | 2014-11 | Meteor | mobile |
| … | … | … | … | … | … | … |

The main columns in the file are:

- date: The date and time of the entry

- duration: The duration (in seconds) for each call, the amount of data (in MB) for each data entry, and the number of texts sent (usually 1) for each sms entry.

- item: A description of the event occurring – can be one of call, sms, or data.

- month: The billing month that each entry belongs to – of form ‘YYYY-MM’.

- network: The mobile network that was called/texted for each entry.

- network_type: Whether the number being called was a mobile, international (‘world’), voicemail, landline, or other (‘special’) number.

Phone numbers were removed for privacy. The date column can be parsed using the extremely handy dateutil library.

import pandas as pd

import dateutil.parser

# Load data from csv file

data = pd.read_csv('phone_data.csv', index_col=0)  # DataFrame.from_csv() was removed in pandas 1.0

# Convert date from string to date times

data['date'] = data['date'].apply(dateutil.parser.parse, dayfirst=True)
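As a minimal sketch of the loading step, the snippet below reads a few rows copied from the sample table out of an in-memory string (standing in for phone_data.csv) and parses the day-first dates with pd.to_datetime instead of dateutil. The name of the index column and the explicit format string are my assumptions, based only on the rows shown above.

```python
import io

import pandas as pd

# A few rows copied from the sample table; this string stands in for phone_data.csv.
# The "index" header name is an assumption made for this sketch.
csv_text = """index,date,duration,item,month,network,network_type
0,15/10/14 06:58,34.429,data,2014-11,data,data
1,15/10/14 06:58,13.000,call,2014-11,Vodafone,mobile
2,15/10/14 14:46,23.000,call,2014-11,Meteor,mobile
"""

# Use the first column as the row index, as in the table above
sample = pd.read_csv(io.StringIO(csv_text), index_col=0)

# Parse day-first strings such as "15/10/14 06:58" into timestamps
sample['date'] = pd.to_datetime(sample['date'], format='%d/%m/%y %H:%M')

print(sample.dtypes)
```

Giving an explicit format avoids any ambiguity between day-first and month-first dates, at the cost of failing loudly if a row does not match.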

Summarising the DataFrame

Once the data has been loaded into Python, Pandas makes the calculation of different statistics very simple. For example, mean, max, min, standard deviations and more for columns are easily calculable:

# How many rows are in the dataset?
data['item'].count()
Out[38]: 830

# What was the longest phone call / data entry?
data['duration'].max()
Out[39]: 10528.0

# How many seconds of phone calls are recorded in total?
data['duration'][data['item'] == 'call'].sum()
Out[40]: 92321.0

# How many entries are there for each month?
data['month'].value_counts()
Out[41]:
2014-11 230
2015-01 205
2014-12 157
2015-02 137
2015-03 101
dtype: int64

# Number of non-null unique network entries
data['network'].nunique()
Out[42]: 9

The need for custom functions is minimal unless you have very specific requirements. The full range of basic statistics that are quickly calculable and built into the base Pandas package are:

| Function | Description |
|---|---|
| count | Number of non-null observations |
| sum | Sum of values |
| mean | Mean of values |
| mad | Mean absolute deviation (removed in Pandas 2.0) |
| median | Arithmetic median of values |
| min | Minimum |
| max | Maximum |
| mode | Mode |
| abs | Absolute Value |
| prod | Product of values |
| std | Unbiased standard deviation |
| var | Unbiased variance |
| sem | Unbiased standard error of the mean |
| skew | Unbiased skewness (3rd moment) |
| kurt | Unbiased kurtosis (4th moment) |
| quantile | Sample quantile (value at %) |
| cumsum | Cumulative sum |
| cumprod | Cumulative product |
| cummax | Cumulative maximum |
| cummin | Cumulative minimum |

The .describe() function is a useful summarisation tool that will quickly display statistics for any variable or group it is applied to. The describe() output varies depending on whether you apply it to a numeric or character column.
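To illustrate the difference between the numeric and character cases, here is a small sketch using four of the call rows from the sample table. The frame is built by hand purely so that the snippet is self-contained.

```python
import pandas as pd

# Durations and networks of rows 1 to 4 in the sample table
df = pd.DataFrame({
    'duration': [13.0, 23.0, 4.0, 4.0],
    'network': ['Vodafone', 'Meteor', 'Tesco', 'Tesco'],
})

# Numeric column: count, mean, std, min, quartiles, and max
numeric_summary = df['duration'].describe()

# Text column: count, number of unique values, most common value, and its frequency
text_summary = df['network'].describe()

print(numeric_summary)
print(text_summary)
```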

Summarising Groups in the DataFrame

There’s further power put into your hands by mastering the Pandas “groupby()” functionality. Groupby essentially splits the data into different groups depending on a variable of your choice. For example, the expression data.groupby('month') will split our current DataFrame by month.

The groupby() function returns a GroupBy object, which essentially describes how the rows of the original data set have been split. The GroupBy object’s .groups attribute is a dictionary whose keys are the computed unique groups and whose values are the axis labels belonging to each group. For example:

data.groupby(['month']).groups.keys()
Out[59]: ['2014-12', '2014-11', '2015-02', '2015-03', '2015-01']

len(data.groupby(['month']).groups['2014-11'])
Out[61]: 230
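For a closer look at what a GroupBy object holds, the following sketch uses a tiny hand-made frame (not the real phone data) to inspect the groups dictionary, pull out the rows of a single group with get_group(), and count rows per group with size().

```python
import pandas as pd

# Small made-up frame, for illustration only
df = pd.DataFrame({
    'month': ['2014-11', '2014-11', '2014-12', '2015-01'],
    'duration': [13.0, 23.0, 4.0, 602.0],
})

grouped = df.groupby('month')

# .groups maps each group key to the row labels that belong to it
group_keys = sorted(grouped.groups.keys())
rows_in_nov = len(grouped.groups['2014-11'])

# get_group() returns the rows of a single group as a DataFrame
nov = grouped.get_group('2014-11')

# size() returns the number of rows in every group in one call
sizes = grouped.size()

print(group_keys)
print(nov)
print(sizes)
```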

Functions like max(), min(), mean(), first(), last() can be quickly applied to the GroupBy object to obtain summary statistics for each group – an immensely useful function. This functionality is similar to the dplyr and plyr libraries for R. Different variables can be excluded / included from each summary requirement.

# Get the first entry for each month

data.groupby('month').first()

Out[69]:

date duration item network network_type

month

2014-11 2014-10-15 06:58:00 34.429 data data data

2014-12 2014-11-13 06:58:00 34.429 data data data

2015-01 2014-12-13 06:58:00 34.429 data data data

2015-02 2015-01-13 06:58:00 34.429 data data data

2015-03 2015-02-12 20:15:00 69.000 call landline landline

# Get the sum of the durations per month

data.groupby('month')['duration'].sum()

Out[70]:

month

2014-11 26639.441

2014-12 14641.870

2015-01 18223.299

2015-02 15522.299

2015-03 22750.441

Name: duration, dtype: float64

# Get the number of dates / entries in each month

data.groupby('month')['date'].count()

Out[74]:

month

2014-11 230

2014-12 157

2015-01 205

2015-02 137

2015-03 101

Name: date, dtype: int64

# What is the sum of durations, for calls only, to each network

data[data['item'] == 'call'].groupby('network')['duration'].sum()

Out[78]:

network

Meteor 7200

Tesco 13828

Three 36464

Vodafone 14621

landline 18433

voicemail 1775

Name: duration, dtype: float64

You can also group by more than one variable, allowing more complex queries.

# How many calls, sms, and data entries are in each month?

data.groupby(['month', 'item'])['date'].count()

Out[76]:

month item

2014-11 call 107

data 29

sms 94

2014-12 call 79

data 30

sms 48

2015-01 call 88

data 31

sms 86

2015-02 call 67

data 31

sms 39

2015-03 call 47

data 29

sms 25

Name: date, dtype: int64

# How many calls, texts, and data are sent per month, split by network_type?

data.groupby(['month', 'network_type'])['date'].count()

Out[82]:

month network_type

2014-11 data 29

landline 5

mobile 189

special 1

voicemail 6

2014-12 data 30

landline 7

mobile 108

voicemail 8

world 4

2015-01 data 31

landline 11

mobile 160

....

Groupby output format – Series or DataFrame?

The output from a groupby and aggregation operation varies between Pandas Series and Pandas DataFrames, which can be confusing for new users. As a rule of thumb, if you calculate more than one column of results, your result will be a DataFrame. For a single column of results, the agg function, by default, will produce a Series.

You can change this by selecting your operation column differently:

# produces Pandas Series

data.groupby('month')['duration'].sum()

# Produces Pandas DataFrame

data.groupby('month')[['duration']].sum()

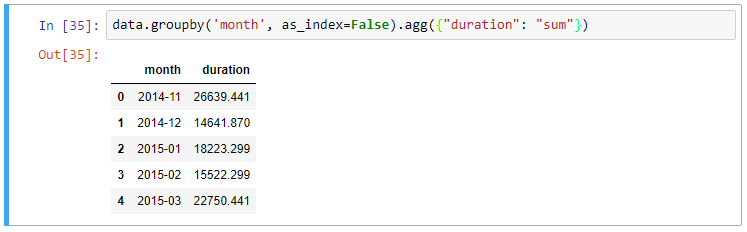

The groupby output will have an index or multi-index on rows corresponding to your chosen grouping variables. To avoid setting this index, pass “as_index=False” to the groupby operation.

data.groupby('month', as_index=False).agg({"duration": "sum"})
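The output shapes discussed in this section can be compared side by side in a short sketch. The frame is made up for illustration, and the reset_index() call at the end is an alternative I am adding for comparison rather than something the section requires.

```python
import pandas as pd

# Small made-up frame, for illustration only
df = pd.DataFrame({
    'month': ['2014-11', '2014-11', '2014-12'],
    'duration': [13.0, 23.0, 4.0],
})

# Single brackets: a Series indexed by month
as_series = df.groupby('month')['duration'].sum()

# Double brackets: a DataFrame indexed by month
as_frame = df.groupby('month')[['duration']].sum()

# as_index=False: month stays an ordinary column
flat = df.groupby('month', as_index=False).agg({'duration': 'sum'})

# reset_index() on an indexed result gives the same flat layout
also_flat = as_series.reset_index()

print(type(as_series).__name__, type(as_frame).__name__)
print(flat)
```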

Multiple Statistics per Group

The final piece of syntax that we’ll examine is the “agg()” function for Pandas. The aggregation functionality provided by the agg() function allows multiple statistics to be calculated per group in one calculation.

Applying a single function to columns in groups

Instructions for aggregation are provided in the form of a python dictionary or list. The dictionary keys are used to specify the columns upon which you’d like to perform operations, and the dictionary values to specify the function to run.

For example:

# Group the data frame by month and item and extract a number of stats from each group

data.groupby(

['month', 'item']

).agg(

{

'duration': 'sum', # Sum duration per group

'network_type': "count", # get the count of networks

'date': 'first' # get the first date per group

}

)

The aggregation dictionary syntax is flexible and can be defined before the operation. You can also define functions inline using “lambda” functions to extract statistics that are not provided by the built-in options.

# Define the aggregation procedure outside of the groupby operation

aggregations = {

'duration':'sum',

'date': lambda x: max(x) - pd.Timedelta(days=1) # custom function: one day before the latest date

}

data.groupby('month').agg(aggregations)

Applying multiple functions to columns in groups

To apply multiple functions to a single column in your grouped data, expand the syntax above to pass in a list of functions as the value in your aggregation dictionary. See below:

# Group the data frame by month and item and extract a number of stats from each group

data.groupby(

['month', 'item']

).agg(

{

# Find the min, max, and sum of the duration column

'duration': ['min', 'max', 'sum'],

# find the number of network type entries

'network_type': "count",

# minimum, first, and number of unique dates

'date': ['min', 'first', 'nunique']

}

)

The agg(..) syntax is flexible and simple to use. Remember that you can pass in custom and lambda functions to your list of aggregated calculations, and each will be passed the values from the column in your grouped data.
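As a sketch of mixing built-in and custom calculations in one list, the example below combines a string name, a named helper function, and a lambda. The value_range helper and the sample frame are hypothetical names and data invented for this illustration.

```python
import pandas as pd

# Small made-up frame, for illustration only
df = pd.DataFrame({
    'month': ['2014-11', '2014-11', '2014-11', '2014-12', '2014-12'],
    'duration': [13.0, 23.0, 4.0, 602.0, 1050.0],
})


def value_range(series):
    """Difference between the largest and smallest value in a group."""
    return series.max() - series.min()


# Each function in the list receives the 'duration' values of one group
stats = df.groupby('month').agg({
    'duration': ['sum', value_range, lambda x: x.quantile(0.5)],
})

print(stats)
```

Named functions have the advantage that their name becomes the column label, whereas a lambda only gets a generic label.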

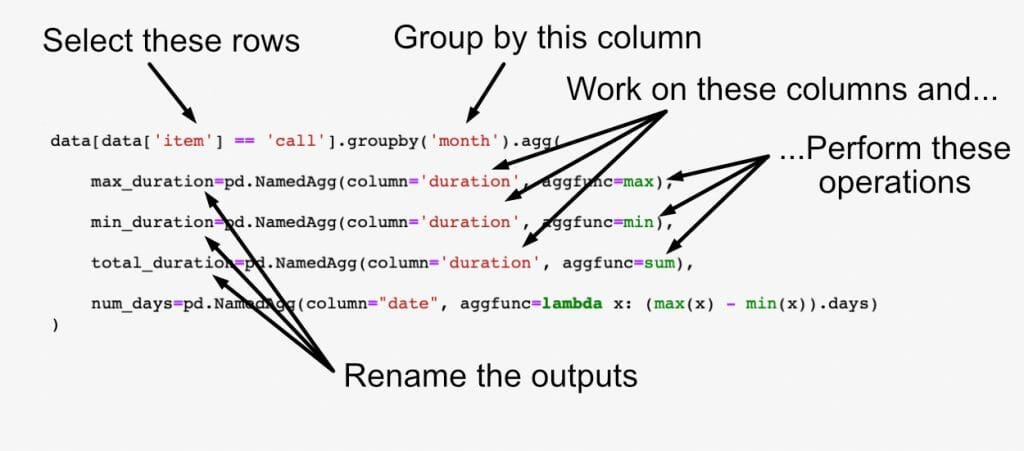

Renaming grouped aggregation columns

We’ll examine two methods to group Dataframes and rename the column results in your work.

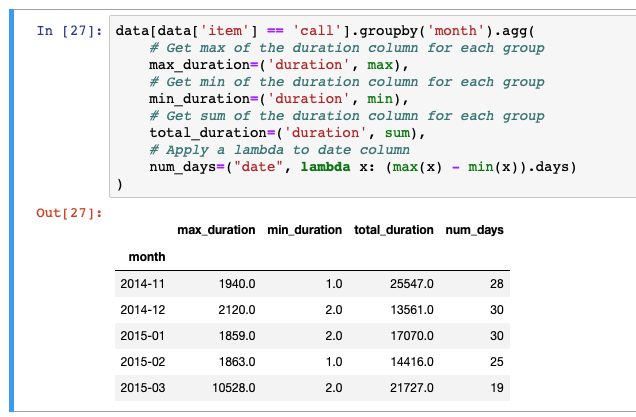

Recommended: Tuple Named Aggregations

Introduced in Pandas 0.25.0, groupby aggregation with relabelling is supported using “named aggregation” with simple tuples. Each keyword argument names an output column, and its tuple gives the column to work on along with the function to apply.

For example:

data[data['item'] == 'call'].groupby('month').agg(

# Get max of the duration column for each group

max_duration=('duration', max),

# Get min of the duration column for each group

min_duration=('duration', min),

# Get sum of the duration column for each group

total_duration=('duration', sum),

# Apply a lambda to date column

num_days=("date", lambda x: (max(x) - min(x)).days)

)

For clearer naming, Pandas also provides the pd.NamedAgg named tuple, which can be used to achieve the same result as plain tuples:

data[data['item'] == 'call'].groupby('month').agg(

max_duration=pd.NamedAgg(column='duration', aggfunc=max),

min_duration=pd.NamedAgg(column='duration', aggfunc=min),

total_duration=pd.NamedAgg(column='duration', aggfunc=sum),

num_days=pd.NamedAgg(

column="date",

aggfunc=lambda x: (max(x) - min(x)).days)

)

Note that in some versions of Pandas following the 0.25.0 release, lambda functions only work in these named aggregations when they are the only function applied to a given column; otherwise a KeyError is raised.

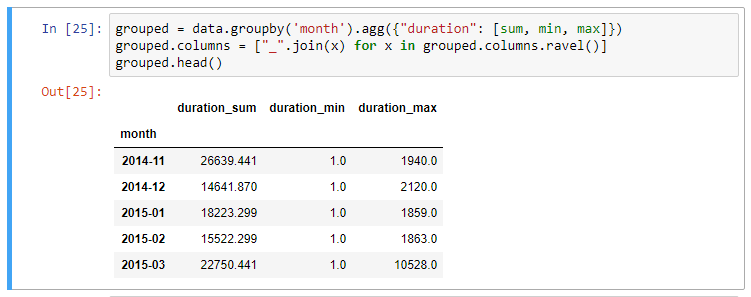

Renaming index using droplevel and ravel

When multiple statistics are calculated on columns, the resulting dataframe will have a multi-index set on the column axis. The multi-index can be difficult to work with, and I typically have to rename columns after a groupby operation.

One option is to drop the top level (using .droplevel) of the newly created multi-index on columns using:

grouped = data.groupby('month').agg({"duration": ["min", "max", "mean"]})

grouped.columns = grouped.columns.droplevel(level=0)

grouped = grouped.rename(columns={

"min": "min_duration", "max": "max_duration", "mean": "mean_duration"

})

grouped.head()

However, this approach loses the original column names, leaving only the function names as column headers. A neater approach, as suggested to me by a reader, is using the ravel() method on the grouped columns. Ravel() turns a Pandas multi-index into a simpler array, which we can combine into sensible column names:

grouped = data.groupby('month').agg({"duration": ["min", "max", "mean"]})

# Using ravel, and a string join, we can create better names for the columns:

grouped.columns = ["_".join(x) for x in grouped.columns.ravel()]
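A closely related variation, sketched here on a tiny made-up frame, uses the to_flat_index() method of the column multi-index in place of ravel(). Both give a sequence of (column, function) tuples to join, so this is an alternative spelling of the same idea rather than a different technique.

```python
import pandas as pd

# Small made-up frame, for illustration only
df = pd.DataFrame({
    'month': ['2014-11', '2014-11', '2014-12'],
    'duration': [13.0, 23.0, 4.0],
})

grouped = df.groupby('month').agg({'duration': ['min', 'max', 'mean']})

# Each column label is a (column, function) tuple; join the two parts with "_"
grouped.columns = ['_'.join(col) for col in grouped.columns.to_flat_index()]

print(grouped)
```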

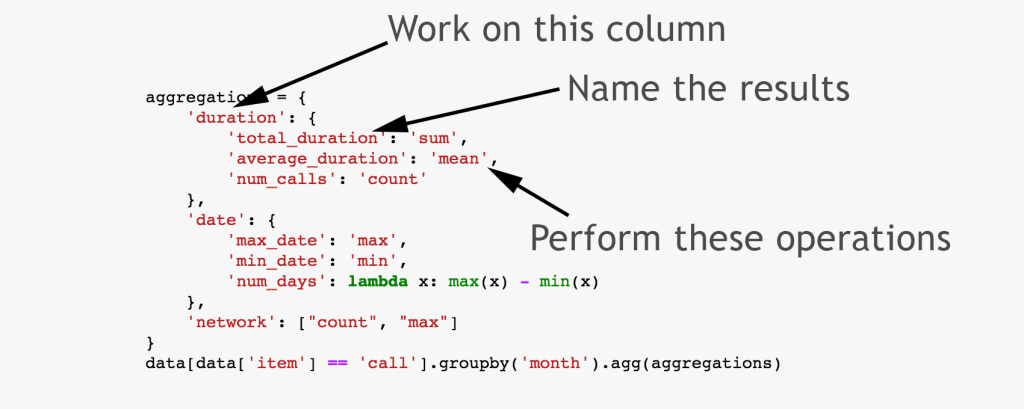

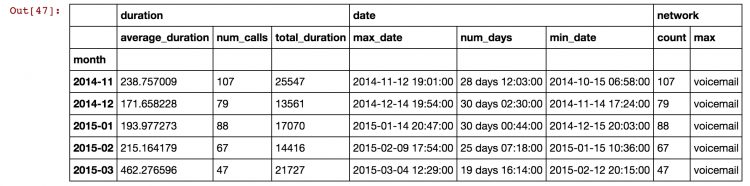

Dictionary groupby format <DEPRECATED>

There were substantial changes to the Pandas aggregation function in May of 2017. Renaming of variables using dictionaries within the agg() function as in the diagram below is being deprecated/removed from Pandas – see notes.

In older Pandas releases (< 0.20.1), renaming the newly calculated columns was possible through nested dictionaries, or by passing a list of functions for a column. Our final example calculates multiple values from the duration column and names the results appropriately. Note that the results have multi-indexed column headers.

Note this syntax will no longer work for new installations of Python Pandas.

# Define the aggregation calculations

aggregations = {

# work on the "duration" column

'duration': {

# get the sum, and call this result 'total_duration'

'total_duration': 'sum',

# get mean, call result 'average_duration'

'average_duration': 'mean',

'num_calls': 'count'

},

# Now work on the "date" column

'date': {

# Find the max, call the result "max_date"

'max_date': 'max',

'min_date': 'min',

# Calculate the date range per group

'num_days': lambda x: max(x) - min(x)

},

# Calculate two results for the 'network' column with a list

'network': ["count", "max"]

}

# Perform groupby aggregation by "month",

# but only on the rows that are of type "call"

data[data['item'] == 'call'].groupby('month').agg(aggregations)
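For newer Pandas versions, a rough equivalent of the nested-dictionary example can be sketched with named aggregation. The frame below is made up for illustration, the network_count and network_max column names are my own, and num_days is derived from the max_date and min_date columns after the aggregation instead of with a lambda (a choice made here to sidestep any lambda restrictions, not something the older syntax required).

```python
import pandas as pd

# Small made-up frame, for illustration only
df = pd.DataFrame({
    'month': ['2014-11', '2014-11', '2014-12', '2014-12'],
    'item': ['call', 'call', 'call', 'sms'],
    'duration': [13.0, 23.0, 602.0, 1.0],
    'date': pd.to_datetime([
        '2014-10-15 06:58', '2014-10-17 14:46',
        '2014-11-13 09:00', '2014-11-14 10:00',
    ]),
    'network': ['Vodafone', 'Meteor', 'Three', 'Meteor'],
})

# Keyword = output column name, tuple = (input column, function)
result = df[df['item'] == 'call'].groupby('month').agg(
    total_duration=('duration', 'sum'),
    average_duration=('duration', 'mean'),
    num_calls=('duration', 'count'),
    max_date=('date', 'max'),
    min_date=('date', 'min'),
    network_count=('network', 'count'),
    network_max=('network', 'max'),
)

# Date range per group, in whole days, computed from the aggregated columns
result['num_days'] = (result['max_date'] - result['min_date']).dt.days

print(result)
```

The result has flat, single-level column headers, so no renaming step is needed afterwards.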

Summary of Python Pandas Grouping

The groupby functionality in Pandas is well documented in the official docs and performs at speeds on a par (unless you have massive data and are picky with your milliseconds) with R’s data.table and dplyr libraries.

If you are interested in another example for practice, I used these same techniques to analyse weather data for this post, and I’ve put “how-to” instructions here.

There are plenty of resources online on this functionality, and I’d recommend really conquering this syntax if you’re using Pandas in earnest at any point.

- DataQuest Tutorial on Data Analysis: https://www.dataquest.io/blog/pandas-tutorial-python-2/

- Chris Albon notes on Groups: https://chrisalbon.com/python/pandas_apply_operations_to_groups.html

- Greg Reda Pandas Tutorial: http://www.gregreda.com/2013/10/26/working-with-pandas-dataframes/

One more question, based on the output, how to change the table format to show only one column header?

The post is very helpful.

I have one question here. In the agg statement, how to get quantile 0.05, 0.25, …?

I tried quantile(.05) in agg, but always got following error:

AttributeError: ‘SeriesGroupBy’ object has no attribute ‘quantile(0.05)’

Can someone help on it? Thank you

Thank you Shanelynn. Truly appreciate for the help. ^-^

The lambda is working well in this way as you suggested.

Yet, as my aggregations are too many, so I defined a dictionary: dict, and then used in agg(dict) function.

Others like mean, std are working well, only for quantile, it always prompt error.

I tried following ways:

1. dict[‘work_column’] = ‘quantile’ – fine, but no 0.05

2. dict[‘work_column’] = ‘quantile(0.05)’ – error

3. dict[‘work_column’] = lambda x: x.quantile(0.05) – error

So if I use this way, how to solve the error?

Sorry, I made a mistake by missing out one “x” in lambda in my python program. Solution 3: dict[‘work_column’] = lambda x: x.quantile(0.05) is working now.
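For anyone with the same quantile question, here is one possible sketch. The q() helper is a hypothetical function written for this example, not part of Pandas; it builds a named function for each quantile so that the output columns get readable labels. The durations are Tesco and Three calls taken from the sample table.

```python
import pandas as pd

# Call durations for two networks from the sample table
df = pd.DataFrame({
    'network': ['Tesco', 'Tesco', 'Tesco', 'Three', 'Three'],
    'duration': [4.0, 4.0, 4.0, 602.0, 1050.0],
})


def q(p):
    """Build an aggregation function that returns the p-th quantile (hypothetical helper)."""
    def quantile_p(series):
        return series.quantile(p)
    # The function name becomes the output column label, e.g. 'q05'
    quantile_p.__name__ = f'q{round(p * 100):02d}'
    return quantile_p


# A dictionary of aggregations, mixing a built-in name with quantile functions
aggregations = {'duration': ['mean', q(0.05), q(0.25)]}
summary = df.groupby('network').agg(aggregations)

# For a single quantile, the grouped column also has a quantile() method
direct = df.groupby('network')['duration'].quantile(0.05)

print(summary)
print(direct)
```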

Thank you Shanelynn again for the help there.

I would like to convert spark dataframe to pandas data frame for below calculations. would some one help me here please.I am new to pandas dataframe.

for x in (column name list which is coming from the input parameter)

value = df.withcolumn(x,df[x]*df[column name which is coming from the input parameter]

value1 = df.groupby(column name which is coming from the input parameter).agg(*F.sum(x).alias(‘y_’+x) for x in (column name list which is coming from the input parameter),F.sum(y).alias(yy))

value2 = value1.withcolumn(‘y_’+x,value1[‘y_’+x]/valu1[yy]

Hey shanelynn, I have the same question, it cannot to check the type of the data[‘num_days’], because it is a new variable established in the aggregations. when loading the data at first, the type of the variables (except duration) are all object. Could you kindly help me solve this problems, thanks a lot.

This is really helpful! Thank you for pulling everything together.

Just found this in the documentation: If you want to be sure the output columns will be in a specific order, you can use an OrderedDict. For example instead of:

grouped.agg({‘D’: ‘std’, ‘C’: ‘mean’})

Use:

grouped.agg(OrderedDict([(‘D’, ‘std’), (‘C’, ‘mean’)]))

Maggie – this is a great find, thanks for that. I also see that Pandas has updated some of the API for grouped transactions – I’ll update the text to reflect both asap.

Nice! It would be good if you could use the “date” column directly for the operations instead of creating a separate column called “month” 🙂 I have the same kind of time-series dataset and am having problems with some calculations, since they don’t support my timestamp column directly (e.g. timestamp 21-06-2016 09:46:34) 🙁

Hi, thanks for this post.

Just to let you know that the dict in dict column renaming feature has been deprecated by Pandas 0.20

See https://github.com/pandas-dev/pandas/pull/15931 for details, and at the bottom of the thread for kind of a workaround.

I personally find it impractical to remove this feature, but the devs have already decided on this one…

Done! Thanks for this Zertrin. Came up with a neat solution.

Thanks for this Zertrin – it’s on my todo list to update this post for the new API changes – hopefully I’ll get that sorted in the coming days. I don’t like the new way either!

Hi, thanks for this post

I wonder how I can group the monthly temperature data according to specific ranges such as (=30C) and find their percentage during the month?

Hi Balsam, Good question, and one perhaps that I should cover in the post – I might update it. You can group by arbitrary variables by passing them into the groupby function as such: “data.groupby(data['temp'] > 30)” and then apply any function you’d like, in your case probably something like “data.groupby(['month', data['temp'] > 30])['month'].count()” to get counts, and a further step afterwards to get the percentages.
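The counts-then-percentages idea can be sketched as follows. The temperature readings are made up for illustration, the 30-degree threshold comes from the reply above, and the above_30 label is a hypothetical name chosen for the boolean grouping key.

```python
import pandas as pd

# Made-up temperature readings, purely for illustration
df = pd.DataFrame({
    'month': ['2016-06'] * 4 + ['2016-07'] * 4,
    'temp': [25.0, 31.0, 33.0, 28.0, 35.0, 29.0, 36.0, 37.0],
})

# Boolean key: is the reading above the threshold?
above_30 = (df['temp'] > 30).rename('above_30')

# Count readings per month on each side of the threshold
counts = df.groupby(['month', above_30])['temp'].count()

# Divide by the per-month totals to turn counts into percentages
percentages = 100 * counts / counts.groupby(level='month').transform('sum')

# Shortcut: the mean of a boolean column is the share of True values
share_hot = above_30.groupby(df['month']).mean() * 100

print(percentages)
print(share_hot)
```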

Thank you for this clear post. It was of great help.

This is excellent and helped tremendously, thank you

The renaming problem has troubled me a lot, thanks for sharing this excellent work with us!

The ravel() function really helps the renaming problem after agg()! Thanks for sharing this post with us.

Plus, I found your website demonstrates a good display of both pictures and code block, may I know what tools you used?


Wow, thanks! Concise and exactly what I was looking for!

Very useful article. Thanks. Another nice aggregation function is describe which will dump a table of descriptive statistics. For example:

data.groupby('network')['duration'].describe()

Thanks Steve, good suggestion and I agree. I’ve added a note about the Pandas Describe function in the post!

Hi, I have a csv file. I need to find the mean, median and mode for a few columns.

Excellent resource!!!

best for me! Thanks a lot. It helps me handle data!

Thanks for the detailed tutorial. A further question..if you don’t have the month column, how to group in month ?

Hi Will, thanks for reading. You will have to have some column to group by. If you have a column with timestamps, you can round them to months before you group, or even in your groupby() call. I’d recommend looking at the Python “arrow” library for nice date time management. In particular – arrow.floor(“month”) – in your case.
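As a sketch of grouping by month without a dedicated column, the example below derives a monthly period from the timestamps inside the groupby() call. It uses the Pandas dt accessor instead of the arrow library mentioned above, and it groups by calendar month, which is not the same as the billing month used in the post. The dates and durations are taken loosely from the sample table.

```python
import pandas as pd

# A few timestamped entries, for illustration only
df = pd.DataFrame({
    'date': pd.to_datetime([
        '2014-10-15 06:58', '2014-10-16 15:01', '2014-11-13 06:58',
    ]),
    'duration': [13.0, 602.0, 34.429],
})

# Derive a calendar-month period from each timestamp and group on it directly
by_month = df.groupby(df['date'].dt.to_period('M'))['duration'].sum()

print(by_month)
```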


Hi there, thanks for diving into this topic, very helpful. I am trying to do a grouping by a “virtual” column. In your case, how could you aggregate the data, voice and sms durations for every week? Or to make it more general: for every period of x days?

Thanks and best regards,

clemens

Hi Clemens, thanks for the kind words. You have two options for a “virtual” column – either actually define the column and add it to the data frame before the groupby() call, or just define it on the fly in the groupby() call itself. Perhaps it’s not clear in the post (I may change it if not), but the value in the “groupby” can be a column name, or it can actually be the values to actually group the data by.

For example, to group by the week number (accessible from datetime.isocalendar()[1]):

data.groupby(data['date'].apply(lambda x: x.isocalendar()[1]))['duration'].sum()

Hope this helps!
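For the more general case of a period of x days, one option is pd.Grouper, sketched below with 7-day windows on a made-up frame. The window boundaries are determined by Pandas from the earliest timestamp in the data, so treat the exact bin edges as an assumption to check against your own data.

```python
import pandas as pd

# Small made-up frame, for illustration only
df = pd.DataFrame({
    'date': pd.to_datetime([
        '2014-10-15 06:58', '2014-10-16 15:01', '2014-10-23 09:00',
    ]),
    'item': ['call', 'call', 'sms'],
    'duration': [13.0, 602.0, 1.0],
})

# pd.Grouper buckets the 'date' column into fixed-width 7-day windows;
# adding 'item' splits each window by call, sms, and data
per_week = df.groupby([pd.Grouper(key='date', freq='7D'), 'item'])['duration'].sum()

print(per_week)
```

Changing freq to another day count, such as '10D', gives windows of that length.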

I’m using the pandas version 0.23.4. I used the rename feature using a nested dictionary (first picture of the post) in python 2.7 and python 3.6. It worked perfectly for me. Did they re introduce this feature?

in the telephone data example, if i want to find the following:

1. find the network in which maximum calls were done and on what dates

2. find the dates on which the first three maximum calling were done and through which network

3. are there any days, wherein data is consumed more than mobile usage and in what network has it happened

pls send me the codes