[Short version] The S3 ingestion script that Logentries provides for Amazon applications does not work with the gzip-compressed log files produced by Elastic Beanstalk's log rotation. A slightly edited script that does work can be found on GitHub here.[/Short version]

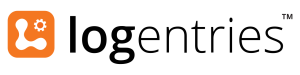

Logentries is a brilliant startup, originating here in Dublin, that collects and analyses log files in the cloud in real time. The company was founded by Viliam Holub and Trevor Parsons, spinning out of academic research at University College Dublin. It's a great service, with a decent free tier (5 GB per month and 7-day retention).

At KillBiller, we run a Python backend system to support cost calculations on the Amazon Elastic Beanstalk platform, which simplifies scaling, deployment, and database management. The backend uses a Python Flask application to serve HTTP requests from our user-facing mobile app.

Setting up automatic logging from Elastic Beanstalk (and Python) to Logentries proved more difficult than expected. I wanted the automatically rotated logs from the Elastic Beanstalk application to be uploaded to Logentries. This is achieved by:

- Setting up your Amazon Elastic Beanstalk (EB) application to “rotate logs” through S3.

- Using AWS Lambda to detect the upload of logs from EB and trigger a script. Logentries provide a tutorial for this.

- Using the Lambda script to upload the logs from S3 directly to Logentries (this is where the trouble starts).
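Each upload reaches the Lambda function as an S3 event notification that lists the affected bucket and object key. As a minimal sketch (Python 3, with hypothetical helper names; this is not the Logentries code), a handler can pull those pairs out of the event like this:

```python
from urllib.parse import unquote_plus


def s3_objects_from_event(event):
    """Return a list of (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Keys are URL-encoded in the event (e.g. a space arrives as '+'),
        # so decode them before calling s3.get_object.
        key = unquote_plus(s3_info["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def lambda_handler(event, context):
    """Hypothetical entry point: one iteration per uploaded object."""
    for bucket, key in s3_objects_from_event(event):
        # The real script would fetch, decompress and forward the object here.
        print("new object: s3://%s/%s" % (bucket, key))
```

The handler only sees keys, so everything else (fetching, decompressing, sending) hangs off this loop.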

The problem is that Amazon places a single gzip-compressed file in your S3 bucket during each log rotation, while the Lambda script provided by Logentries only works with plain-text files.
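To see why a text-only handler struggles, compare the first bytes of a gzip file with plain text. The sketch below (Python 3; `is_gzip` and `body_to_text` are hypothetical helpers, not part of the Logentries script) checks for the gzip magic number and decompresses only when it is present. This is one possible way a single function could cope with both compressed and plain objects.

```python
import gzip
import zlib

# The gzip magic number (RFC 1952): every gzip member starts with these two bytes.
GZIP_MAGIC = b"\x1f\x8b"


def is_gzip(data):
    """Return True if `data` (bytes) starts with the gzip magic number."""
    return data[:2] == GZIP_MAGIC


def body_to_text(data):
    """Return the object body as text, gunzipping it first if necessary."""
    if is_gzip(data):
        # 16 + MAX_WBITS makes zlib expect a gzip header and trailer.
        data = zlib.decompress(data, 16 + zlib.MAX_WBITS)
    return data.decode("utf-8", errors="replace")


if __name__ == "__main__":
    packed = gzip.compress(b"GET /index 200\n")
    print(is_gzip(packed))  # True
    try:
        packed.decode("utf-8")  # decoding the raw compressed body as text fails
    except UnicodeDecodeError as exc:
        print("compressed body is not valid UTF-8:", exc.reason)
    print(body_to_text(packed), end="")
```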

With only a few small changes, we can edit the script to take the gzip file from S3, decompress it in memory, and, with the help of Python's zlib and StringIO modules, turn it back into plain text for Logentries to ingest. The full edited script is available here, and the parts that have changed from the original version are:

```python
...
import zlib
import StringIO  # Python 2.7 standard-library module
...

# Get the object from the S3 bucket
response = s3.get_object(Bucket=bucket, Key=key)
body = response['Body']

# Read the data from the object; at this point it is still gzip-compressed bytes.
compressed_data = body.read()
s.sendall("Successfully read the zipped contents of the file.")

# Decompress with zlib: 16 + zlib.MAX_WBITS tells zlib to expect a gzip
# header and trailer instead of a raw zlib stream.
data = zlib.decompress(compressed_data, 16 + zlib.MAX_WBITS)
s.sendall("Decompressed data")

# Now continue the script as normal to send the data to Logentries.
for token in tokens:
    s.sendall('%s %s\n' % (token, "username='{}' downloaded file='{}' from bucket='{}' and uncompressed!.".format(...)))  # format arguments elided
...
```
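The edited script reads the whole object into memory before decompressing it, which works as long as each file fits comfortably in the Lambda function's memory. If the files get large, a streaming variant is possible with `zlib.decompressobj`. What follows is a minimal Python 3 sketch (the original Lambda script targets Python 2.7). It assumes the S3 `Body` is read in chunks via `.read(n)`. `iter_gunzipped_lines`, `to_logentries_messages` and the `TOKEN` value are hypothetical names for illustration. The token-plus-line format follows the `'%s %s\n'` pattern used in the script above.

```python
import gzip
import io
import zlib


def iter_gunzipped_lines(fileobj, chunk_size=64 * 1024):
    """Yield text lines from a gzip stream without reading it all at once.

    `fileobj` is anything with a .read(n) method returning bytes, such as
    the 'Body' of an S3 get_object response or an io.BytesIO.
    """
    # 16 + MAX_WBITS: expect a gzip header and trailer, as in the script above.
    decompressor = zlib.decompressobj(16 + zlib.MAX_WBITS)
    pending = b""
    while True:
        chunk = fileobj.read(chunk_size)
        if not chunk:
            break
        pending += decompressor.decompress(chunk)
        # Yield every complete line; keep the trailing partial line for later.
        *complete, pending = pending.split(b"\n")
        for line in complete:
            yield line.decode("utf-8", errors="replace")
    pending += decompressor.flush()
    tail = pending.split(b"\n")
    if tail[-1] == b"":
        tail.pop()  # a final newline leaves an empty last element
    for line in tail:
        yield line.decode("utf-8", errors="replace")


def to_logentries_messages(token, lines):
    """Prefix each line with the log token, followed by a space and a newline."""
    for line in lines:
        yield "%s %s\n" % (token, line)


if __name__ == "__main__":
    body = io.BytesIO(gzip.compress(b"GET /a 200\nGET /b 404\n"))
    for msg in to_logentries_messages("TOKEN", iter_gunzipped_lines(body, chunk_size=8)):
        print(msg, end="")
```

Inside the Lambda function, each message would then be encoded (`msg.encode("utf-8")`) and passed to `s.sendall`.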

In terms of the other steps, here are the key points:

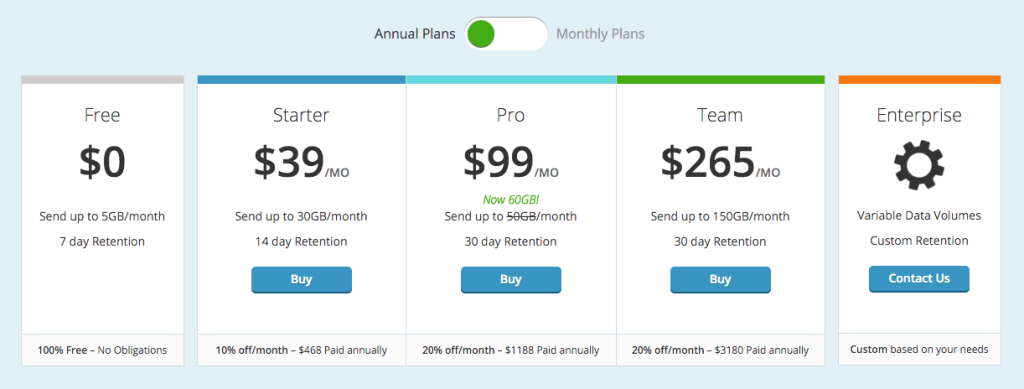

1. Turn on Log Rotation for your Elastic Beanstalk Application

This setting is found under “Software Configuration” in the Configuration page of your Elastic Beanstalk application.
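The same setting can also be applied outside the console. Here is a hedged sketch. It assumes the console checkbox corresponds to the `LogPublicationControl` option in the `aws:elasticbeanstalk:hostmanager` namespace, passed to boto3's `update_environment` call. `log_publication_option` is a hypothetical helper. The environment ID is the one used as an example further down this post.

```python
def log_publication_option(enabled=True):
    """Return the Elastic Beanstalk option setting for publishing rotated logs to S3."""
    return {
        "Namespace": "aws:elasticbeanstalk:hostmanager",
        "OptionName": "LogPublicationControl",
        "Value": "true" if enabled else "false",  # option values are strings
    }


if __name__ == "__main__":
    settings = [log_publication_option()]
    # Applying it requires boto3 and AWS credentials, for example:
    # import boto3
    # boto3.client("elasticbeanstalk").update_environment(
    #     EnvironmentId="e-mpcwnwheky", OptionSettings=settings)
    print(settings)
```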

At regular intervals, Amazon collects the logs from every instance in your application, compresses them with gzip, and places them in an Amazon S3 bucket under a separate folder for each environment and instance. In my case:

`/elasticbeanstalk-eu-west-1-<account number>/resources/environments/logs/publish/<environment id>/<instance id>/<log files>.gz`
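Because every key follows this layout, the Lambda function can recover which environment and instance a file came from, for example to tag the lines it forwards. Here is a minimal sketch. It assumes the key (without the bucket name, which S3 events report separately) always has exactly this shape. `parse_eb_log_key`, the instance ID and the file name in the example are hypothetical.

```python
PUBLISH_PREFIX = "resources/environments/logs/publish/"


def parse_eb_log_key(key):
    """Split a rotated-log key into (environment_id, instance_id, filename).

    Assumes the layout publish/<environment id>/<instance id>/<log file>;
    returns None for any key that does not fit it.
    """
    if not key.startswith(PUBLISH_PREFIX):
        return None
    parts = key[len(PUBLISH_PREFIX):].split("/", 2)
    if len(parts) != 3 or not all(parts):
        return None
    environment_id, instance_id, filename = parts
    return environment_id, instance_id, filename


if __name__ == "__main__":
    # Hypothetical instance ID and file name, for illustration only.
    print(parse_eb_log_key(
        "resources/environments/logs/publish/e-mpcwnwheky/i-0123abcd/app.log.gz"))
```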

2. Set up AWS Lambda to detect log changes and upload to Logentries

To complete this step, follow the instructions Logentries provide on this page. The only changes I think are worth making are:

- Instead of the “le_lambda.py” file that Logentries provide, use the slightly edited version I have here. You’ll still need the “le_certs.pem” file, and the two files must be zipped together when creating your AWS Lambda function.

- When configuring the event sources for your AWS Lambda function, you can specify a prefix for the notifications, so that only logs for a given environment ID are uploaded, rather than every change to your S3 bucket (such as manually requested logs). For example:

  `resources/environments/logs/publish/e-mpcwnwheky/`

- If you have other Lambda functions (perhaps running the original le_lambda.py) handling uncompressed files, you can use the “suffix” filter so that this function only triggers for “.gz” files.
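The prefix and suffix filters can also be set outside the console. This sketch builds the `NotificationConfiguration` structure accepted by boto3's `put_bucket_notification_configuration`, plus a local `key_matches` helper that mirrors the filter logic. The function ARN and helper names are hypothetical. Note that this call replaces the bucket's whole notification configuration. S3 also needs permission to invoke the function, which the Lambda console typically adds when you configure the trigger there.

```python
def lambda_notification_config(function_arn, prefix, suffix=".gz"):
    """Build an S3 notification config that invokes one Lambda function
    only for new objects under `prefix` whose keys end in `suffix`."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},
                            {"Name": "suffix", "Value": suffix},
                        ]
                    }
                },
            }
        ]
    }


def key_matches(key, prefix, suffix=".gz"):
    """Local check mirroring the filter: both rules apply to the object key."""
    return key.startswith(prefix) and key.endswith(suffix)


if __name__ == "__main__":
    config = lambda_notification_config(
        "arn:aws:lambda:eu-west-1:123456789012:function:le-gzip",  # hypothetical ARN
        "resources/environments/logs/publish/e-mpcwnwheky/",
    )
    # Applying it requires boto3 and credentials, and overwrites existing notifications:
    # import boto3
    # boto3.client("s3").put_bucket_notification_configuration(
    #     Bucket="elasticbeanstalk-eu-west-1-<account number>",
    #     NotificationConfiguration=config)
    print(config)
```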

Now your logs should flow freely to Logentries; get busy setting up some dashboards, tags, and alerts.